This morning I received news that I was elected a Nutanix Technology Champion 2016. I am very excited to be included in this group and can't wait for the exciting opportunities it will bring!

Please read the announcement here

It is only early days for me as an NTC but I already see how cool this is going to be. Nutanix has just created an NTC list on Slack which gives you access not only to other NTC but also to Nutanix employees and even the CEO himself. You can also see what the NTC are up to by subscribing to the Nutanix NTC list on Twitter.

Sunday, 6 December 2015

Saturday, 5 December 2015

Configuring Acropolis and creating a VM on Nutanix CE

Time flies when you are having fun! Back in August this year I wrote a post on installing Nutanix CE on nested ESXi with no SSD. I did not get around to doing a follow-up on how to configure Acropolis so that I can actually start deploying some VM. As I currently have only one node I will not be exploring any advanced features in this post.

Assuming you have your cluster up and running the next thing to do is log into your CE PRISM interface. What you will notice is that you do not have a storage pool configured

- Go to Home > Storage menu

- Click create container link and add a name

- No storage pool exists yet so click the + sign

- Add a name for your pool and assign all available storage. Click Save

- I am not going to enable any of the advanced features at this stage so will just click save

- Under Storage > Storage Pools I can see in the summary that my pool and container have been created

- To create a VM we will need access to an ISO. We will store the ISO on the CVM. The first step is to create another container for storing the ISO. Go to Home > Storage and create a container. I set the advertised capacity to 20 GB for this container.

- To allow the upload of an ISO we will need to allow the host that holds the ISO access to the CVM. This can be done by making use of a Filesystem Whitelist.

- Add the subnet that holds the source host and click add

- You can now mount the NFS share from a Linux or Windows host. As I have no Linux host handy I will just use Windows by enabling the Windows NFS Client first.

- We can now copy the desired ISO via Windows Explorer to the ISO datastore on the CVM. Alternatively you can save yourself some effort and use something like WinSCP to copy the files.

- You will also need to upload the KVM Virtio drivers so that the KVM virtual hardware is properly recognized. You can download the drivers from here

- Before we go on to create the VM we will need to configure some networking. Go to Home > VM > Network config. Create a network by specifying a VLAN. I am not enabling IP address management as I want to control that part outside of Acropolis. I am assigning the native VLAN ID 0.

- Now we are ready to create a VM. Go to Home > VM > Create VM. Enter a name and the desired compute configuration

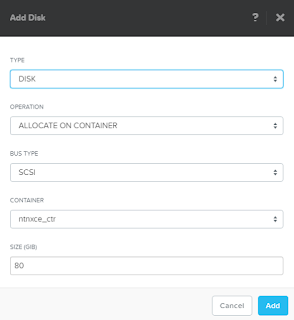

- You also will have to add a disk for your VM. Click add disk and specify configuration.

I want the disk to be stored in the ntnxce_ctr container and 80 GB should be plenty for my VM

- We need to mount the ISO to the VM. Click on the CD ROM and edit settings. Ensure you specify "clone from ndfs file" and the correct path to the ISO

- We also need to make the VirtIO driver available to the VM. This is easiest done by adding a second CD-ROM. Click add disk and specify settings as follows

- We also need to assign a nic to the VM. Click add nic and select the VLAN you set up earlier on in the process.

- Select VM in Prism and power on. Once it has been powered on open the console.

- You are now ready to install Windows

- Once you get to the part asking where to install windows you will notice no disk is available. This is where the VirtIO driver comes in

- Click Load Driver and browse to the mounted ISO. Select the correct folder for your OS.

- Click OK. The driver should be detected. Click next.

- The drive is now detected. You can continue with install

- Once the install has been completed you will notice that no network connections are available. Go to Device Manager and check for Other devices

- Select the ethernet controller and browse to the VirtIO ISO. Update the driver

- Update the driver for the PCI device. This will install the balloon driver

- All that is left to do for me is to assign static IP configuration as I am not letting Acropolis deal with the IP management nor do I make use of DHCP.

- We have connectivity

We now have Windows 2012 running on KVM running on Nutanix CE running on ESXi 5.5 running on HP hardware, and with that we come to the end of this post.

I will definitely do another post where we go over advanced features.

Thursday, 22 October 2015

Nutanix Connect Chapter Champion!

I just received the exciting news that my nomination as Nutanix Connect Champion has been confirmed! It also turns out I am the first chapter leader in New Zealand.

I am looking forward to my Champion Welcome kit and UG Events kit arriving. I am not sure what to expect from it all but I am looking forward to running the local chapter. Nutanix will be providing me with the resources to make it a success.

Looks like Nutanix Connect Champion comes with quite a few benefits too! I am entitled to the following gems:

- Nutanix .Next User Conference Lifetime Pass: Nutanix offers their first 25 champions lifetime free passes and I am one of the lucky 25!

- 40% .Next Discount for your entire team: My colleagues can get 40% off conference pricing any time and that is on top of the already 40% off for the early birds pricing.

- .Next Unconference Early submissions to Call for Speaker Proposals: Nutanix reserves early submission of speaker proposals to the .Next Conference Unconference track for the Chapter Champions.

- 20% Discounts on NPP/NPX, Acropolis and VDI Training for you and the chapter

- Free Nutanix Fit Check: When you purchase one fit check your company is entitled to one free Fit Check

Tuesday, 20 October 2015

High CPU Contention in vROPS

Last week I was investigating a VM that was perceived to have CPU performance issues. As always I investigate the following metrics first to rule out contention:

- CPU Ready

- Co-Stop

- Swap Wait

- I/O Wait

The values of the metrics returned were well within the acceptable range and thus did not indicate any contention. However the CPU Contention metric showed a rather high value. I saw this pattern across most of the VM too.

So what is the CPU Contention metric all about? From what I understand it is not a granular metric but a derived metric which allows you to quickly spot that the VM is suffering from CPU contention.

You can then inspect the individual metrics I mentioned above. Just to make sure, I double-checked the individual CPU metrics in vROPS but found nothing of concern there. Obviously something is not quite right, so let's investigate the workload of our VM.

My VM is configured with 10 GHz of CPU and its demand, indicated by the green bar, is 5 GHz.

Usage, as indicated by the grey bar, is 2 GHz. Demand is what is requested and usage is what is delivered. Since the VM does not get the resources that are requested we have to conclude there is contention somewhere. As I really could not find anything that pointed to contention I turned to Google and started seeing some reports that this could be caused by CPU power management policies. Some people reported they had this issue and it was fixed by disabling power management.

Worth testing it for myself....

The procedure will be different depending on your hardware. In my case it was HP hardware. The following VMware KB may come in handy.

Power management on ESXi can be managed via the host if the host BIOS supports OS control mode.

ESX supports 4 modes of power management:

- High Performance

- Low Power

- Balanced

- Custom

As other people suggested, the high performance policy fixed their issue. This effectively disables power management. You can change this setting without disruption but you will need to reboot your host to ensure the setting is applied. Select your Host > Configuration > Hardware > Power Management. Set to High Performance

In the case of HP hardware, and depending on the generation, you will need to set the power profile and power regulator too. Both of these options can be set in the BIOS and the latter can be set via the iLO interface too. The power profile allows for 4 settings:

- Balanced Power and Performance (Default)

- Minimum Power Usage

- Maximum Performance

- Custom

I changed this to custom as this allows most flexibility and makes all options available.

The Power regulator allows you to configure the following profiles

- Dynamic Power Savings Mode

- Static Low Power Mode

- Static High Performance Mode

- OS Control Mode

This can be changed from either the BIOS or the iLO interface. If changing it in iLO you can do it at any time but it will not take effect until you reboot.

I changed the option to OS control mode as it actually ensures that the processors run in their maximum power and performance mode unless you change the profile via the OS. We did set the policy to High Performance in ESXi so we have now effectively disabled all power savings.

So has all this work actually made a difference? Let's check!

In vROPS we see that the change did indeed make a difference to our CPU Contention metric.

We determined that "contention = demand - usage". When looking at the demand and usage under workload we can see that these are now the same, which means that the VM is getting the resources it requested.
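The relation above can be put into a few lines of Python as a rough sketch. It uses the post's simplified rule of thumb, not the way vROPS computes the metric internally. The "before" figures are the 5 GHz demand and 2 GHz usage mentioned earlier; the "after" figures are an assumption that simply sets usage equal to demand.

```python
def cpu_contention_ghz(demand_ghz: float, usage_ghz: float) -> float:
    """Contention per the post's rule of thumb: demand minus usage, floored at zero."""
    return max(demand_ghz - usage_ghz, 0.0)


# Before the power management change: 5 GHz requested, 2 GHz delivered.
before = cpu_contention_ghz(demand_ghz=5.0, usage_ghz=2.0)

# After the change: usage matches demand (5 GHz is an assumed, illustrative value).
after = cpu_contention_ghz(demand_ghz=5.0, usage_ghz=5.0)

print(f"before: {before:.1f} GHz, after: {after:.1f} GHz")
# before: 3.0 GHz, after: 0.0 GHz
```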

Looks like our contention is gone. Although there were really no complaints from users in regards to performance, one colleague found that one of his VM was performing better after this change.

Although the focus of this post was on vROPS there are other ways of determining whether there is contention. In ESXtop I found no issue with the likes of %RDY and %CSTP but I did notice that the %LAT_C entry was high and it also indicated that the VM wanted (%RUN) more resources than it received (%USED). This blog post by Michael Wilmsen does a very good job explaining it in more detail.

Wednesday, 14 October 2015

One-Click upgrade to Acropolis 4.5.

I am very excited about the release of Acropolis 4.5. It has some great features I am eager to test, one of them being Azure Cloud Connect.

When I was about to upgrade I noticed that the new NOS version did not appear in the list of available upgrades.

I did notice that NCC 2.1.1 was available to upgrade which was released at the same time.

Assuming it was an issue with PRISM I did restart the PRISM service

- $ allssh genesis stop prism

- $ cluster start

This did not fix the issue. I also noticed the same problem on another cluster and in my PRISM Central install. Time to check with the knowledgeable SRE at Nutanix :-)

I was told that the One-Click Upgrade for 4.5 is not available. Apparently this is because of the many new features introduced and they would like the customer to be fully aware of these new features before upgrading. Until it is available you can do a manual upgrade. Just upload the binary and metadata file. PRISM Central can be manually upgraded with the same binary/metadata file that you use for upgrading your clusters.

Saturday, 26 September 2015

Nutanix cmdlets and PowerCLI One Liners and then some [Updated]

I really like PowerShell but I have never used it to its full potential. A recent change in job role sees me more involved in the day-to-day stuff so I started brushing up on my coding skills. I will be sharing some of the code I find useful. I still consider myself a novice so don't expect anything fancy, but please share your thoughts; I would love to learn from your experiences.

Stop a protection domain replication

This one liner returns all replications

Get-NTNXProtectionDomainReplication | Select ProtectionDomainName, ID

The above command will return the name and ID of the replication. You will need to specify these when you want to stop the replication

Abort-NTNXProtectionDomainReplication -ProtectionDomainName PD1 -ID 1234567

Using PowerCLI and vSphere tags for VM lifecycle

VM sprawl can get seriously out of control. I recently deleted 50 VM in an environment after it appeared they were no longer required or worse, nobody knew what they were used for. Read More

Find unprotected VM on your Nutanix cluster

This one liner returns which VM are currently not protected.

Get-NTNXUnprotectedVM | Select vmName

List protected VM in a protection domain

This one liner lists all VM in a given protection domain

Get-NTNXVM | where {$_.protectionDomainName -eq "My_PD"} | Select vmName

Add unprotected VM to a protection domain

This one liner adds a VM to a protection domain

Add-NTNXProtectionDomainVM -name "My_PD" -names "VM1"

Remove protected VM from a protection domain

This one liner removes a VM from a protection domain

Remove-NTNXProtectionDomainVM -name "My_PD" -input "VM1"

Configuring the scratch partition

Configure the scratch partition of your ESXi host when making use of an SD install Read More

Disclaimer: Please use examples at your own risk. I take no responsibility for any possible damage to your infrastructure.

Saturday, 19 September 2015

Nutanix Cloud Connect: Backup to AWS

One of the cool features in NOS is Nutanix Cloud Connect which allows you to integrate your on-premises Nutanix cluster with public cloud providers. At the time of writing there is only support for Amazon Web Services but I have been told support for Microsoft Azure is in the works.

Nutanix Cloud Connect is part of the Nutanix data protection functionality and is therefore as easy to manage as if it were a remote Nutanix cluster. Your remote Nutanix cluster is a single AMI instance in EC2. An m1.xlarge instance is automatically deployed when you configure the remote site. EBS is used to store the metadata while S3 is used for the backup storage.

One of the Nutanix clusters I maintain holds about 12 TB worth of data. Currently this is being backed up by an enterprise backup solution which relies on enterprise class storage and it turns out to be a bit expensive.

I am stating the obvious here but to get started you will need a Nutanix cluster running a NOS version that supports Cloud Connect and an AWS account. I will also assume you have a working VPN connection between your site and a VPC dedicated to Nutanix Cloud Connect services. Furthermore, your Nutanix cloud instance will need access to the internet so that it can access aws.amazon.com.

I have tried this configuration by making use of SSH and it works but Nutanix clearly states it is not intended for production purposes as it can lead to a 25% performance decrease.

First thing we need is to add the user and its credentials you have created in AWS.

AWS Configuration

User configuration

- Log into AWS and go to Identity and Access Management

- Under users, click create new users

- Enter a meaningful name such as "NutanixBackup" and ensure that "Generate access key for each user" is ticked. Store the credentials in your password safe.

- Attach an access policy for this user. I have made use of the AdministratorAccess policy for this demo but you probably want to lock it down even more

Network configuration

As the emphasis here is on Nutanix Cloud Connect I will go over the network configuration at a high level. I created a dedicated VPC that I will be using for future workloads in AWS.

Although I only have my Nutanix CVM in this subnet I have decided to make it big enough to cater for future growth. Currently only backing up to AWS is supported but I have been told that Cloud Connect will support DR in the future, which I interpret as bringing up VM within the cloud provider's datacenter. I also created a dedicated internet gateway. The CVM instance makes use of S3 storage and does so over HTTP, so internet access is required. And finally, my routing table is populated with routes that exist in the on-prem datacenter. These routes make use of the virtual gateway that is associated with my VPC connection. I added a default route of 0.0.0.0/0 to my route table and pointed this to the internet gateway. This will ensure that the connection to S3 goes via the internet gateway.
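To illustrate why the default route sends S3 traffic to the internet gateway while on-prem traffic still uses the virtual gateway, here is a minimal Python sketch of most-specific-prefix route selection, which is how a VPC route table picks between overlapping routes. The 10.0.0.0/8 on-prem range, the target names and the sample addresses are all made up for illustration; substitute your own routes.

```python
import ipaddress

# Hypothetical route table: the on-prem prefix and target names are assumptions.
ROUTES = [
    (ipaddress.ip_network("10.0.0.0/8"), "virtual-gateway"),   # assumed on-prem range
    (ipaddress.ip_network("0.0.0.0/0"), "internet-gateway"),   # default route from the post
]


def next_hop(destination: str) -> str:
    """Return the target of the most specific route that contains the destination."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, target) for net, target in ROUTES if addr in net]
    # The default route matches everything, so matches is never empty here.
    _, target = max(matches, key=lambda match: match[0].prefixlen)
    return target


print(next_hop("10.20.30.40"))   # an on-prem address -> virtual-gateway
print(next_hop("203.0.113.10"))  # stand-in for a public endpoint -> internet-gateway
```

Anything that does not fall inside a more specific on-prem route, including the S3 endpoints, ends up on the 0.0.0.0/0 entry.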

Cloud Connect Configuration

With your AWS configuration in place it is now time to configure Cloud Connect. You can do this either via the PRISM GUI or via the Nutanix PowerShell cmdlets.

Credentials configuration

- Log in to PRISM and select Data Protection from the Home menu

- On the right-hand side, choose remote site. Select AWS

- Add the credentials previously created in AWS

Remote site configuration

- Click next (as in the above screenshot)

- Set the region where you deployed CVM and the subnet will be detected

- Reset the admin password of the Nutanix CVM instance

- Click add next to the vStore Name mapping

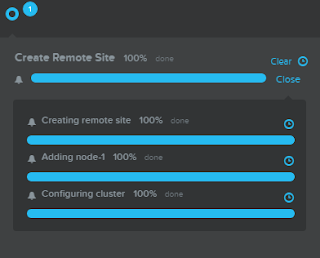

- Click create and the process will start

- It will take a while for the process to complete

- Once the install is complete you can test your connectivity to AWS. Under Data Protection > Table, Select your remote site and click test connection. All going well you should see a green tick

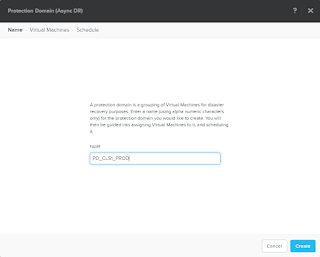

- Now that you have connectivity it is time to set up some protection domains. Click the green "+Protection Domain" and select Async DR.

- Enter a name for your protection domain and click create

- Select VM to protect

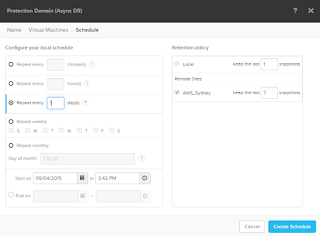

- Create a new schedule

- Set the frequency and enable your remote site. You will also need to specify your retention

Monitor your replications

- Go Home > Data Protection. Here you will see several tiles displaying active data. In this example you can see that I have 1 remote site, 2 outbound replications and I am getting speeds around the 32 MBps mark.

- Select the table link at the top. Here you see a list of all the protection domains

- Under the replication tab you will see the ongoing, pending and completed replications
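To get a feel for how long an initial replication might take, here is a back-of-the-envelope Python sketch. It takes the roughly 12 TB held on the cluster and the 32 MBps seen on the replication tile, and assumes decimal units, that MBps means megabytes per second, a constant rate, and no savings from compression or deduplication. Treat the result as a rough upper-level estimate, not a measurement.

```python
def transfer_hours(data_tb: float, rate_mb_per_s: float) -> float:
    """Hours needed to move data_tb terabytes at rate_mb_per_s megabytes per second."""
    total_mb = data_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    seconds = total_mb / rate_mb_per_s
    return seconds / 3600


hours = transfer_hours(data_tb=12, rate_mb_per_s=32)
print(f"{hours:.0f} hours, about {hours / 24:.1f} days")
# 104 hours, about 4.3 days
```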

I did run into some issues while implementing backup to AWS. On a few occasions I noticed that my transfer bandwidth came to a standstill. The first time I got around it by rebooting the CVM instance in AWS. When it occurred again I involved Nutanix support and they found that the AWS CVM was running out of memory, which basically crashed the CVM. The solution was to upgrade the AWS instance to an m2.2xlarge instance.

Sunday, 13 September 2015

Re-image Nutanix block with ESXi

Your Nutanix block has made it all the way to the central North Island of New Zealand and you have unpacked the block, taken the box for a ride around town and installed the block into the rack. Your new Nutanix block is factory shipped with the KVM hypervisor but your environment's hypervisor of choice is VMware ESXi. This is where Nutanix Foundation comes in. In a previous post I explained how to install the Nutanix Foundation VM. This post will cover how to re-image your nodes.

Once you have logged into your Foundation VM you need to click the Nutanix Foundation icon, which makes use of the Firefox icon.

This will take you to the Foundation GUI. This interface will walk you through the following 4 steps:

- Global Config

- Block and Node Config

- Node Imaging

- Clusters

Global Config

The global configuration screen allows you to specify settings which are common to all the nodes you are about to re-image. At the bottom you see a multi-home checkbox. This only needs to be checked when your IPMI, hypervisor and CVM are in different subnets. Since it is best practice to keep these in the same subnet, there is normally no reason to tick the box.

In this case you will enter the same netmask and gateway. For IPMI, enter the default ADMIN\ADMIN credentials. You can change this later. Enter your organisational DNS server under hypervisor. You probably make use of another DNS server and you can add this one later too.

The CVM memory can be adjusted as required. In my case this is 32 GB

Block and Node Config

The block and node config screen allows you to discover the new nodes. Remember that your Foundation VM needs to have an IP in the same subnet as the new nodes and that IPv6 needs to be supported. The new block and its new nodes should be automatically detected if all pre-requisites have been met. If not, you can try the discovery again by clicking the retry discovery button.

Enter the IP addresses you want to use for the IPMI, hypervisor and CVM interfaces for each discovered node. You can also set the desired name for your hypervisor host

Node Imaging

The node imaging allows you to specify the NOS package and hypervisor you want to use for re-imaging your node. You should ensure that the NOS and hypervisor version you specify is the same as the ones in use on your cluster. It is not strictly necessary but it makes life a bit easier. You will need to upload your NOS and hypervisor installer files to the Nutanix Foundation VM. By default, there is enough disk space available on the foundation VM to hold at least one of each. It is important that the files are stored in the correct location on the VM. You can upload them from your laptop to the VM with the following SCP commands:

scp -r <NOS_filename> nutanix@foundationvm:foundation/nos

scp -r <hypervisor_filename> nutanix@foundationvm:foundation/isos/hypervisor/<hypervisorname>

Clusters

As I am actually not creating a cluster but planning to expand an existing one I do not specify a new cluster. I do click the run installation button and I get a message that informs me the imaging will take around 30 minutes.

The installer will kick off once you click the proceed button.

Just sit back and wait for the process to complete.

Tuesday, 8 September 2015

Migrating virtual machine networking from an inconsistent virtual distributed switch

Redundancy is great and a must in every design but occasionally things happen that are unforeseen.

Last week we had a situation where some end-of-life TOR switches were due for replacement. The first switch was removed and everything kept ticking as expected. The standby adapter on the virtual switches took over as expected. Unknown to the network engineer undertaking the work, one of the power supplies on the secondary switch had failed. Unfortunately for him he knocked the second power supply cable and all connectivity to the underlying storage was lost. The power was quickly restored and the switch rebooted, and most of the VM escaped unscathed.

The SQL cluster holding the vCenter database was not so lucky. It lost access to all its underlying ISCSI disks and unfortunately that meant loss of access to the vCenter database. Even though connectivity to the database was reasonably quickly restored it was not until a day later that problems became noticeable. A particular VM was no longer networking and upon inspection it became clear the network adapter's connected property was unchecked. Not a biggie one would think and all you have to do is check the box. Unfortunately this did not work and vCenter presented the following error: Invalid Configuration for Device '0'

Not an actual picture of my error. I found this in the public domain.

Trying to find a solution to this problem I came across this article on the VMware KB. The article explains several workarounds. Option 1 did not work, although we had some success with option 3:

Option 3

- SSH to the host and determine the VMID for the affected virtual machine using the command:

vim-cmd vmsvc/getallvms | grep -i VMNAME

- Use the VMID from the command in step 1 to reload the configuration on the host by running the command:

vim-cmd vmsvc/reload VMID

- Edit the settings of the virtual machine and connect the NIC.

It soon became clear there were all kinds of other issues. vMotioning, cloning or restoring from backup became pretty much impossible. When looking at the virtual distributed switch I noticed that it was in a warning state and that the vDS configuration on several hosts was out of sync with the vCenter vDS settings.

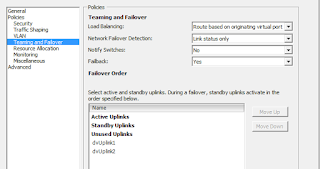

Looking further into the distributed vSwitch configuration I noticed that all settings were "null".

The VLAN ID had disappeared, the uplinks were set to unused, etc. Obviously this was not truly the case as every VM and VMkernel were still on the network.

One of the possible solutions I came across was to do a manual sync. vCenter has an option called "Rectify vNetwork Distributed Switch on Host" which allows you to manually bring the vDS back in sync.

As this did not work as a solution I decided to open a support request with VMware. After repeating most of the above and submitting the usual logs I was advised that I had to rebuild the virtual distributed switch; something I had been contemplating doing.

So how do you go about migrating virtual networking between two virtual distributed switches while avoiding any outages? You can make use of the migrate virtual machine network wizard.

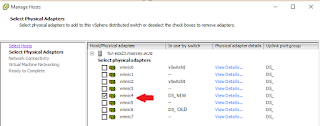

First step is to create a new vDS. You will need to give it a different name than your existing switch. Also, ensure that its configuration is exactly the same. You would not want to leave off a port group or assign an incorrect vlan id for example. I am assuming that you have two uplinks to your existing virtual distributed switch. In my case I make use of one active and one standby uplink on each virtual port group. Having these two uplinks will ensure you do not lose connectivity as we will need to disconnect one uplink.
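Before moving anything it can help to double-check that the new switch really does mirror the old one. The following Python sketch compares two hand-written inventories of port groups and their VLAN IDs and reports anything missing or mismatched. The port group names and VLAN IDs are hypothetical, and the sketch does not talk to vCenter; it only compares whatever you type in.

```python
def compare_port_groups(old: dict[str, int], new: dict[str, int]) -> list[str]:
    """List port groups missing from the new switch or carrying a different VLAN ID."""
    problems = []
    for name, vlan in sorted(old.items()):
        if name not in new:
            problems.append(f"{name}: missing on new vDS")
        elif new[name] != vlan:
            problems.append(f"{name}: VLAN {new[name]} on new vDS, expected {vlan}")
    return problems


# Hypothetical port group names and VLAN IDs, noted down by hand from each switch.
old_vds = {"Management": 10, "vMotion": 20, "NFS": 30, "VM-Prod": 100}
new_vds = {"Management": 10, "vMotion": 20, "NFS": 31}

for problem in compare_port_groups(old_vds, new_vds):
    print(problem)
# NFS: VLAN 31 on new vDS, expected 30
# VM-Prod: missing on new vDS
```

An empty result means every port group on the old switch exists on the new one with the same VLAN ID.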

- Go to Networking and select the inconsistent virtual distributed switch

- Under Configuration, select manage hosts. Click the desired host

- On the adapter screen deselect your primary uplink

- Click next on the following screens and accept defaults.

- Go to the newly created virtual distributed switch configuration screen

- Click Add host

- Select the same host as you selected in step 2

- Select the adapter you deselected in step 3. The standby link will still reflect the name of the current switch.

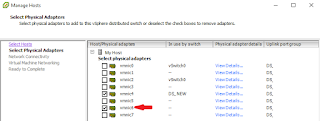

- Under the new virtual distributed switch, select manage hosts. Click the host you selected in step 2/7

- You'll notice the adapter you moved in step 8 is selected. It now states it is in use by the new switch. You will also notice that the other NIC is still in use by the old switch. Click Next

- Migrate your NFS and vMotion vmk to the new switch. Click Next, accept other defaults and finish

- On the new vDS, select manage hosts once more. Click next until you get to Virtual machine Networking screen. Enable the check box

- Set the destination ports on the new vDS. Click Next and finish

- Add the remaining NIC to the new vDS. Go to the old switch and click manage hosts. Select the host and click next

- Deselect the NIC and click next.

- A warning will appear informing you that no physical adapters are selected for one or more hosts. Click yes. Click next on all screens and finish

- On the new vDS, click manage hosts. Select your host and click next

- Select the NIC you deselected in step 15. Click next and finish

- Go to old vDS and select hosts tab. Remove from the virtual distributed switch

- Accept the warning about removing selected hosts from vDS

- Delete the old switch

I could probably have done this in fewer steps but chose not to as I had never attempted this before. You should be able to move NIC, VMK and VM in one go but I had no opportunity to test this.
