Redundancy is great and a must in every design, but occasionally the unforeseen still happens.

Last week some end-of-life top-of-rack (TOR) switches were due for replacement. The first switch was removed and everything kept ticking: the standby adapters on the virtual switches took over as expected. Unknown to the network engineer undertaking the work, one of the power supplies on the secondary switch had failed. Unfortunately for him, he knocked the cable of the second power supply and all connectivity to the underlying storage was lost. Power was quickly restored, the switch rebooted, and most of the VMs escaped unscathed.

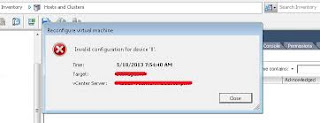

The SQL cluster holding the vCenter database was not so lucky. It lost access to all its underlying iSCSI disks, which meant vCenter lost access to its database. Although connectivity to the database was restored reasonably quickly, it was not until a day later that problems became noticeable. A particular VM was no longer on the network, and on inspection it became clear that the network adapter's Connected property was unchecked. Not a biggie, one would think: all you have to do is check the box. Unfortunately this did not work and vCenter presented the following error: Invalid Configuration for Device '0'

Not an actual picture of my error; I found this one in the public domain.

Trying to find a solution to this problem, I came across this article on the VMware KB. The article explains several workarounds. Option 1 did not work, although we had some success with option 3:

Option 3

1. SSH to the host and determine the VMID for the affected virtual machine using the command:

   vim-cmd vmsvc/getallvms | grep -i VMNAME

2. Use the VMID from the command in step 1 to reload the configuration on the host by running the command:

   vim-cmd vmsvc/reload VMID

3. Edit the settings of the virtual machine and connect the NIC.
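When more than a handful of VMs are affected, picking the VMID out of the listing by eye gets tedious. The following is a minimal Python sketch of that lookup, not something taken from the KB article. It assumes the listing starts each row with the numeric Vmid, followed by the VM name and then the bracketed datastore path. The sample output and VM names are made up, and `find_vmids` and `reload_command` are hypothetical helper names. Unlike `grep -i`, which matches anywhere on the line, this only matches on the name column.

```python
import re

# Illustrative sample of `vim-cmd vmsvc/getallvms` output (made-up VMs).
SAMPLE_OUTPUT = """\
Vmid   Name      File                             Guest OS                Version   Annotation
12     sql01     [datastore1] sql01/sql01.vmx     windows7Server64Guest   vmx-08
27     web 01    [datastore1] web 01/web 01.vmx   rhel6_64Guest           vmx-08
"""

# Assumed row layout: numeric Vmid, then the name, then "[datastore] path".
ROW = re.compile(r"^(\d+)\s+(.*?)\s+\[")


def find_vmids(getallvms_output, name_fragment):
    """Return (vmid, name) pairs whose name contains name_fragment (case-insensitive)."""
    matches = []
    for line in getallvms_output.splitlines():
        row = ROW.match(line)
        if row and name_fragment.lower() in row.group(2).lower():
            matches.append((int(row.group(1)), row.group(2)))
    return matches


def reload_command(vmid):
    """Build the reload command from step 2 for a single VMID."""
    return f"vim-cmd vmsvc/reload {vmid}"


if __name__ == "__main__":
    for vmid, name in find_vmids(SAMPLE_OUTPUT, "SQL01"):
        print(name, "->", reload_command(vmid))
```

The script only prints the command rather than running it, so the result can be checked before anything is reloaded on the host.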

It soon became clear there were all kinds of other issues. vMotion, cloning and restoring from backup had become pretty much impossible. Looking at the virtual distributed switch, I noticed that it was in a warning state and that the vDS configuration on several of the hosts was out of sync with the vCenter vDS settings.

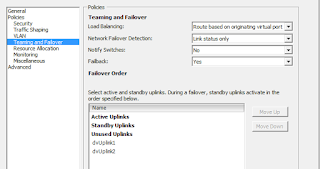

Looking further into the distributed vSwitch configuration, I noticed that all settings were "null".

The VLAN ID had disappeared, the uplinks were set to unused, and so on. Obviously this was not truly the case, as every VM and VMkernel port was still on the network.

One of the possible solutions I came across was to do a manual sync. vCenter has an option called "Rectify vNetwork Distributed Switch on Host", which allows you to manually bring the vDS back in sync.

As this did not work either, I decided to open a support request with VMware. After repeating most of the above and submitting the usual logs, I was advised that I had to rebuild the virtual distributed switch, something I had already been contemplating.

So how do you go about migrating virtual networking between two virtual distributed switches while avoiding any outages? You can make use of the migrate virtual machine network wizard.

The first step is to create a new vDS. You will need to give it a different name from your existing switch, but its configuration must otherwise be exactly the same. You would not want to leave off a port group or assign an incorrect VLAN ID, for example. I am assuming that you have two uplinks to your existing virtual distributed switch. In my case I make use of one active and one standby uplink on each virtual port group. Having these two uplinks ensures you do not lose connectivity, as we will need to disconnect one uplink at a time.
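A missing port group or a wrong VLAN ID is easy to overlook when the new switch is built by hand, so it can help to write both configurations down and compare them before moving anything. The sketch below is only an illustration of that check. The settings are typed in as plain dictionaries, and the labels, VLAN IDs and uplink names are all made up. The entries are keyed by a label of my own choosing rather than the port group name, since the port groups on the new switch may need different names.

```python
def diff_port_groups(old, new):
    """Return readable differences between two {label: settings} maps."""
    problems = []
    for label, settings in sorted(old.items()):
        if label not in new:
            problems.append(f"missing port group: {label}")
            continue
        for key, expected in sorted(settings.items()):
            actual = new[label].get(key)
            if actual != expected:
                problems.append(f"{label}: {key} is {actual!r}, expected {expected!r}")
    for label in sorted(set(new) - set(old)):
        problems.append(f"unexpected port group: {label}")
    return problems


# Hypothetical settings of the existing switch.
OLD_VDS = {
    "prod": {"vlan_id": 10, "active": ["dvUplink1"], "standby": ["dvUplink2"]},
    "nfs": {"vlan_id": 20, "active": ["dvUplink1"], "standby": ["dvUplink2"]},
    "vmotion": {"vlan_id": 30, "active": ["dvUplink1"], "standby": ["dvUplink2"]},
}

# Hypothetical new switch with two deliberate mistakes:
# a wrong VLAN ID on "nfs" and no "vmotion" port group at all.
NEW_VDS = {
    "prod": {"vlan_id": 10, "active": ["dvUplink1"], "standby": ["dvUplink2"]},
    "nfs": {"vlan_id": 21, "active": ["dvUplink1"], "standby": ["dvUplink2"]},
}

if __name__ == "__main__":
    for problem in diff_port_groups(OLD_VDS, NEW_VDS):
        print(problem)
```

An empty result means the two descriptions agree. It says nothing about whether the dictionaries themselves match what is configured in vCenter.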

- Go to Networking and select the inconsistent virtual distributed switch

- Under Configuration, select manage hosts. Click the desired host

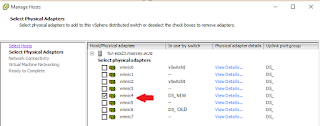

- On the adapter screen deselect your primary uplink

- Click next on the following screens and accept defaults.

- Go to the newly created virtual distributed switch configuration screen

- Click Add host

- Select the same host as you selected in step 2

- Select the adapter you deselected in step 3. The standby link will still reflect the name of the current switch.

- Under the new virtual distributed switch, select manage hosts. Click the host you selected in step 2/7

- You'll notice the adapter you moved in stap 8 in selected. It now states it is in use by the new switch. You also notice that the other NIC is still in use by the old switch. Click Next

- Migrate your NFS and Vmotion vmk to the new switch.Click Next, accept other defaults and finish

- On the new vDS, select manage hosts once more. Click next until you get to Virtual machine Networking screen. Enable the check box

- Set the destination ports on the new vDS. Click Next and finish

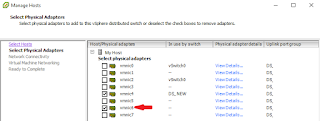

- Add the remaining NIC to the new vDS. Go to the old switch and click manage hosts. Select the host and click next

- Deselect the NIC and click next.

- A warning will appear informing you that no physical adapters are selected for one or more hosts. Click yes. Click next on all screens and finish

- On the new vDS, click manage hosts. Select your host and click next

- Select the NIC you deselected in step 15. click next and finish

- Go to old vDS and select hosts tab. Remove from the virtual distributed switch

- Accept the warning about removing selected hosts from vDS

- Delete the old switch
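The order of these steps matters: an uplink is only taken away from a switch while that switch either has a second uplink or no longer carries anything. The following Python sketch models that rule. It is a toy simulation and touches no vSphere API. The NIC, VMkernel and switch names are hypothetical, and `run_plan` is a name invented for this example.

```python
OLD, NEW = "old-vds", "new-vds"

# Hypothetical starting point: two uplinks and all traffic on the old switch.
START_UPLINKS = {"vmnic0": OLD, "vmnic1": OLD}
START_WORKLOADS = {"vmk-nfs": OLD, "vmk-vmotion": OLD, "vm-network": OLD}

# The procedure above, condensed into moves.
PLAN = [
    ("detach", "vmnic0"),        # deselect the primary uplink on the old switch
    ("attach", "vmnic0", NEW),   # add the host and that uplink to the new switch
    ("move", "vmk-nfs", NEW),    # migrate the NFS vmk
    ("move", "vmk-vmotion", NEW),  # migrate the vMotion vmk
    ("move", "vm-network", NEW),   # migrate virtual machine networking
    ("detach", "vmnic1"),        # deselect the remaining NIC on the old switch
    ("attach", "vmnic1", NEW),   # add it to the new switch
]


def run_plan(plan, uplinks, workloads):
    """Apply the moves in order; fail if anything ends up on a switch with no uplink."""
    uplinks, workloads = dict(uplinks), dict(workloads)
    for number, move in enumerate(plan, start=1):
        action, item = move[0], move[1]
        if action == "detach":
            uplinks[item] = None
        elif action == "attach":
            uplinks[item] = move[2]
        elif action == "move":
            workloads[item] = move[2]
        else:
            raise ValueError(f"unknown action: {action}")
        # Switches that still have at least one physical uplink attached.
        live = {switch for switch in uplinks.values() if switch is not None}
        for workload, switch in workloads.items():
            if switch not in live:
                raise RuntimeError(f"move {number}: {workload} on {switch} has no uplink")
    return uplinks, workloads


if __name__ == "__main__":
    print(run_plan(PLAN, START_UPLINKS, START_WORKLOADS))
```

Detaching both NICs before anything has been migrated makes `run_plan` raise on the second move, which is exactly the outage the two-uplink approach is meant to avoid.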

I could probably have done this in fewer steps but chose not to, as I had never attempted this before. You should be able to move the NICs, VMkernel ports and VMs in one go, but I had no opportunity to test this.