"Community Edition is a 100% software solution enabling technology enthusiasts to easily evaluate the latest hyperconvergence technology at zero cost. Users can now experience the same that powers the datacenters of thousands of leading enterprises around the world."

I am fortunate that I get to use Nutanix in the workplace. Unfortunately, I do not have the luxury of a Nutanix cluster in a lab environment. When I first came across Nutanix, I asked whether there was a virtual appliance that would let me "play" around with the technology. Fast forward two years, and Nutanix Community Edition allows me to do just that!

Last year I was lucky enough to visit Nutanix HQ in San Jose and had the honor of meeting the dude himself, Steven Poitras. This is where I first heard about Acropolis, and I was very excited about what it had to offer. My employer is a big user of VMware, so I will not be getting an opportunity to use Acropolis any time soon. Nothing wrong with VMware, and since my employer is an educational institution we do get a good price on VMware licensing.

Being a Nutanix advocate, I still want to learn about Acropolis, and it seems Nutanix CE will allow me to do just that! After registering for the BETA I downloaded the image and looked over the hardware requirements. According to the documentation the minimal requirements are as follows:

The development lab I make use of is based on vSphere 5.5 rather than version 6. I also wanted to start off with a single-node cluster, as I am definitely not after performance and just wanted to test Acropolis functionality.

The host I ended up using was an HP DL380 G7 with dual CPUs, 144 GB of RAM, a 72 GB volume and an additional 2.7 TB volume. And no SSD drives!

The install process was pretty straightforward and these are the steps I followed:

- Download the Nutanix CE installer and extract files from the ce-2015.07.16-beta.img.gz file

- Rename the ce-2015.07.16-beta.img to ce-2015.07.16-beta-flat.vmdk

- Log in to your vSphere Web Client and go to vCenter > Storage

- Upload the descriptor file ce.vmdk. Here is an example of one you can modify

- Create a new VM. Ensure you set its compatibility to ESXi 5.5 and later. Choose CentOS version 4/5/6/7 64-bit as the Operating System

- Create a new port group that allows promiscuous mode. I called it NTNX CVM

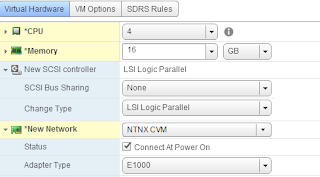

- Adjust the hardware settings:

- Change vCPU to 4 and the RAM to 16 GB

- Change the network adapter to E1000

- Set network to the port group you have created

- Set SCSI adapter to Paravirtual

- Delete the default virtual disk

- Under New Device, select Add Existing Hard Drive. Add the existing ce.vmdk to SCSI 0:0

- Add another new hard disk (500 GB) and assign it to SCSI 0:1. This will function as my SSD drive

- Add another new hard disk (500 GB) and assign it to SCSI 0:2. This will function as my HDD drive
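The extract, rename and descriptor steps above can also be scripted. What follows is only a rough sketch, not the example descriptor this post originally pointed to: the function names are made up for illustration, and the descriptor values (CID, lsilogic adapter type, 255/63 geometry, hardware version 10) are assumptions based on the usual layout of a VMFS descriptor file. The one value that really matters is the extent size, which has to match the flat file in 512-byte sectors.

```python
import gzip
import shutil
from pathlib import Path

SECTOR_SIZE = 512        # VMDK extents are counted in 512-byte sectors
HEADS = 255              # conventional geometry (assumption)
SECTORS_PER_TRACK = 63   # conventional geometry (assumption)


def extract_flat_image(archive: Path, flat_vmdk: Path) -> int:
    """Decompress the .img.gz archive directly under the -flat.vmdk name.

    Returns the size of the extracted image in bytes.
    """
    with gzip.open(archive, "rb") as src, open(flat_vmdk, "wb") as dst:
        shutil.copyfileobj(src, dst)
    return flat_vmdk.stat().st_size


def build_descriptor(flat_name: str, size_bytes: int) -> str:
    """Return descriptor text that points at the flat extent file."""
    if size_bytes <= 0 or size_bytes % SECTOR_SIZE:
        raise ValueError("image size must be a positive multiple of 512 bytes")
    sectors = size_bytes // SECTOR_SIZE
    cylinders = sectors // (HEADS * SECTORS_PER_TRACK)
    lines = [
        "# Disk DescriptorFile",
        "version=1",
        "CID=fffffffe",            # illustrative content ID (assumption)
        "parentCID=ffffffff",      # no parent disk
        'createType="vmfs"',
        "",
        "# Extent description",
        f'RW {sectors} VMFS "{flat_name}"',
        "",
        "# The Disk Data Base",
        "#DDB",
        'ddb.adapterType = "lsilogic"',
        f'ddb.geometry.cylinders = "{cylinders}"',
        f'ddb.geometry.heads = "{HEADS}"',
        f'ddb.geometry.sectors = "{SECTORS_PER_TRACK}"',
        'ddb.virtualHWVersion = "10"',  # ESXi 5.5 hardware version
    ]
    return "\n".join(lines) + "\n"
```

To use it, call `extract_flat_image(Path("ce-2015.07.16-beta.img.gz"), Path("ce-2015.07.16-beta-flat.vmdk"))`, pass the returned size and the flat file name to `build_descriptor`, and write the result to ce.vmdk before uploading both files to the same datastore folder.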

At this stage there is one thing left to do before we can start the install, and that is to trick the CVM into believing it has access to an SSD drive. This can be achieved by applying an advanced setting.

- Go to VM > Edit Settings > VM Options > Advanced > Edit Configuration Parameters

- Click Add Row and add the following
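For reference, the parameter vSphere generally uses to present a virtual disk as an SSD is `scsiX:Y.virtualSSD` with a value of 1, where X:Y is the SCSI node of the disk. The small sketch below builds that row for a given node. It assumes the stand-in SSD sits on SCSI 0:1 as configured earlier, and the helper names are hypothetical, purely for illustration.

```python
from typing import Tuple


def virtual_ssd_row(controller: int, unit: int) -> Tuple[str, str]:
    """Return the (name, value) pair that flags one virtual disk as an SSD."""
    # vSphere allows SCSI controllers 0-3 and units 0-15; unit 7 is
    # reserved for the controller itself.
    if not 0 <= controller <= 3:
        raise ValueError("SCSI controller must be between 0 and 3")
    if not 0 <= unit <= 15 or unit == 7:
        raise ValueError("SCSI unit must be 0-15, excluding 7")
    return (f"scsi{controller}:{unit}.virtualSSD", "1")


def as_vmx_line(row: Tuple[str, str]) -> str:
    """Format a (name, value) pair the way it would appear in a .vmx file."""
    name, value = row
    return f'{name} = "{value}"'


# The 500 GB disk on SCSI 0:1 is the one standing in for the SSD.
ssd_row = virtual_ssd_row(0, 1)
print(as_vmx_line(ssd_row))
```

The name goes in the first column of the new row and the value in the second. If your SSD stand-in lives on a different SCSI node, adjust the two numbers accordingly.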

Now you are ready to start installing Nutanix Community Edition!

- Power on the VM

- Log in with the username install and no password

- The installer will now verify whether your hardware is supported. I had some issues until I made my virtual SSD 500 GB in size

- Enter your IP configuration for the CVM and the host

- Check the Create single-node cluster option and enter a DNS address

- Make sure you read the EULA from top to bottom or it won't work :-)

- Click start

- Once the installer has finished, browse to https://your-cvm:9440

- Set your password

- Once you have logged in as admin with your new password, you will be prompted for your NEXT credentials

- Ta Da!! Behold Acropolis
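If the browser cannot reach the web console straight after the install, it can help to check whether port 9440 is answering at all before digging further. Here is a minimal sketch using only the Python standard library. The function names are my own, and your-cvm is the same placeholder as in the URL above, so substitute the CVM address you entered during the install.

```python
import socket


def prism_url(cvm_address: str, port: int = 9440) -> str:
    """Build the web console URL for a given CVM address."""
    return f"https://{cvm_address}:{port}"


def port_is_open(host: str, port: int = 9440, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False
```

Calling `port_is_open("your-cvm")` only tells you that something is listening on the port. It does not confirm that the cluster services have finished starting, so give it a few minutes after the installer completes.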

And with this we have finished our install. In the next post we will talk about post-install configuration.
